Machine learning workloads are increasingly deployed at the network edge to meet latency requirements, preserve data privacy, and reduce the bandwidth consumed by transmitting raw sensor data to cloud data centers. However, edge infrastructure poses fundamental challenges for ML deployment. First, devices span an enormous range of computational capabilities, from powerful GPU-equipped servers to resource-constrained embedded systems. Second, modern neural network models often exceed the memory and compute capacity of individual edge devices. Third, dynamic conditions such as user mobility, network variability, and fluctuating resource availability require adaptive deployment strategies. Traditional approaches take one of two routes. They either deploy full models to powerful edge servers, which excludes resource-constrained devices, or they run all inference in the cloud, which reintroduces the latency and bandwidth concerns that motivated edge deployment in the first place. Distributing ML workloads across edge-cloud infrastructure while maintaining acceptable inference latency and adapting to heterogeneous device capabilities remains a central systems challenge.

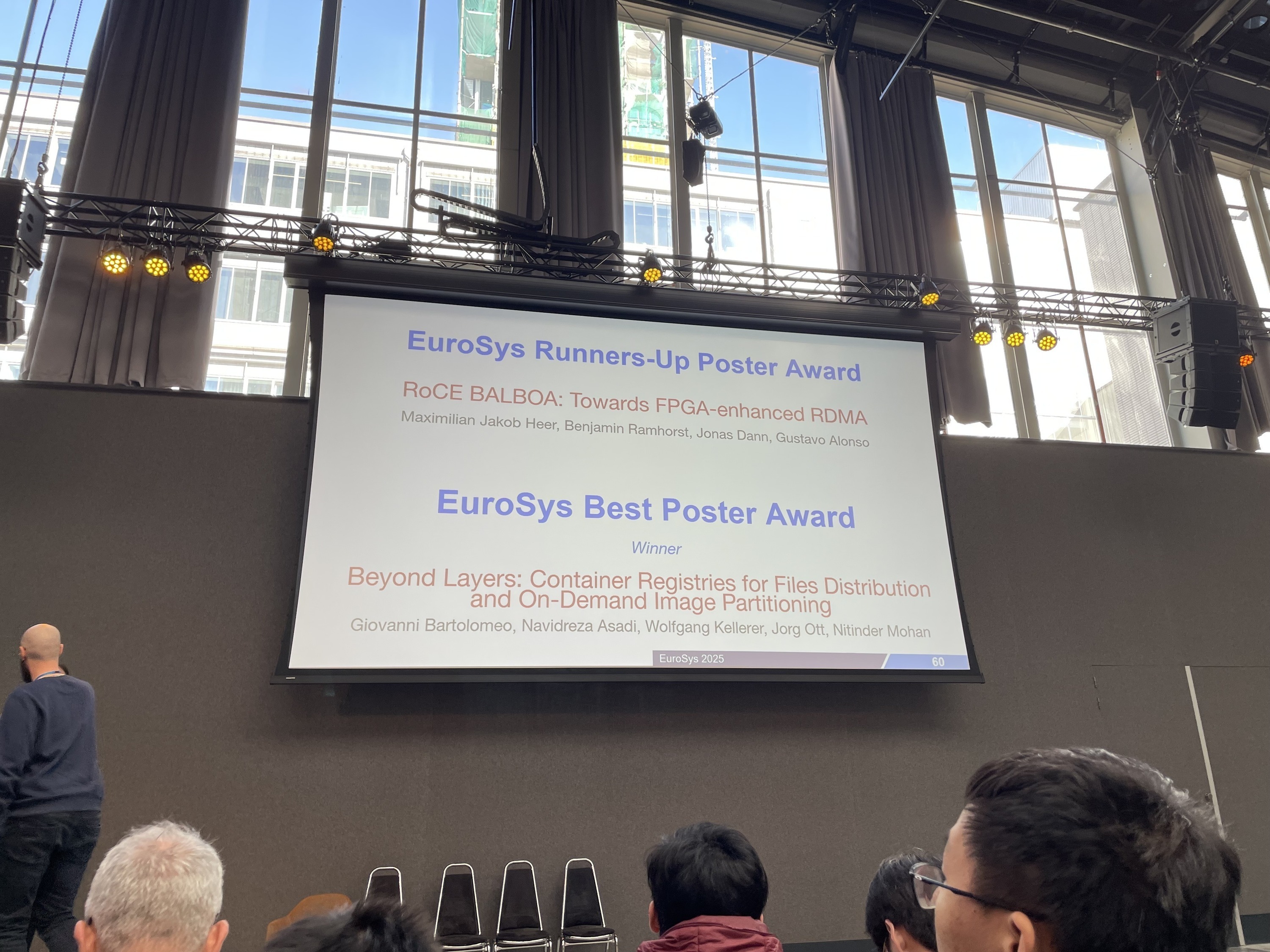

Our research addresses the systems challenges of deploying and managing ML workloads at the edge. We develop automatic container partitioning techniques that analyze application structure, identify optimal partition points, and generate separate container images for distributed execution across edge and cloud. Developers do not need to restructure their applications manually. This makes it possible to deploy models that exceed the capabilities of any single device. It also reduces wide-area network traffic, because data is processed locally and only intermediate representations are transmitted.

We also explore orchestration strategies for heterogeneous edge infrastructure. These strategies take into account device capabilities (CPU, GPU, and specialized accelerators), network conditions, application latency requirements, and the energy constraints of battery-powered devices. Our work integrates ML deployment with edge orchestration frameworks, providing ML-aware scheduling, model registry and versioning systems, and performance monitoring. We further investigate model lifecycle management. This includes deployment-time optimization (quantization, pruning, knowledge distillation), runtime adaptation to changing conditions, and continuous model updates.

Further research directions include:

- energy-aware inference strategies;
- security and privacy in distributed ML deployments;
- model-hardware co-design for edge devices;
- explainability techniques that work within limited computational resources.

Our EdgeAI research is closely connected to our edge orchestration work, and its techniques are integrated into open-source orchestration platforms such as Oakestra.
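To make the idea of a partition point concrete, the sketch below picks a single split in a chain-structured model by minimizing end-to-end latency. Layers before the split run on the edge device, the intermediate tensor crosses the network, and the remaining layers run in the cloud. This is a simplified illustration under stated assumptions, not the group's partitioning tool:

- The names `Layer`, `transfer_ms`, and `best_split` are hypothetical.
- Latency is modeled as additive compute time plus transfer time. Serialization, queuing, and container start-up are ignored.
- The profile numbers in the usage lines are invented for illustration, not measured.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Layer:
    """Hypothetical per-layer profile of a sequential (chain-structured) model."""
    name: str
    edge_ms: float   # execution time on the edge device (ms)
    cloud_ms: float  # execution time on the cloud server (ms)
    out_bytes: int   # size of this layer's output tensor (bytes)
    mem_bytes: int   # memory needed to host this layer on the edge (bytes)


def transfer_ms(num_bytes: int, bandwidth_bps: float) -> float:
    """Time in ms to send num_bytes over a link of bandwidth_bps bits per second."""
    return num_bytes * 8 / bandwidth_bps * 1000.0


def best_split(layers: List[Layer], input_bytes: int, bandwidth_bps: float,
               edge_mem_bytes: int) -> Tuple[int, float]:
    """Return (k, latency_ms): layers[:k] run on the edge, layers[k:] in the cloud.

    k == 0 sends the raw input to the cloud. k == len(layers) runs everything
    on the edge and, as a simplifying assumption, transmits nothing.
    """
    best_k, best_latency = -1, float("inf")
    for k in range(len(layers) + 1):
        edge_part, cloud_part = layers[:k], layers[k:]
        # Skip splits whose edge portion does not fit into device memory.
        if sum(l.mem_bytes for l in edge_part) > edge_mem_bytes:
            continue
        if not cloud_part:
            sent = 0                         # fully on-device
        elif k == 0:
            sent = input_bytes               # ship raw sensor data
        else:
            sent = edge_part[-1].out_bytes   # ship the intermediate tensor
        latency = (sum(l.edge_ms for l in edge_part)
                   + transfer_ms(sent, bandwidth_bps)
                   + sum(l.cloud_ms for l in cloud_part))
        if latency < best_latency:
            best_k, best_latency = k, latency
    return best_k, best_latency


# Illustrative (made-up) profile: 600 kB input, 20 Mbit/s uplink, 8 MB edge memory.
layers = [
    Layer("conv1", edge_ms=8.0, cloud_ms=1.0, out_bytes=800_000, mem_bytes=1_000_000),
    Layer("conv2", edge_ms=12.0, cloud_ms=1.5, out_bytes=200_000, mem_bytes=2_000_000),
    Layer("pool", edge_ms=2.0, cloud_ms=0.2, out_bytes=50_000, mem_bytes=100_000),
    Layer("fc", edge_ms=30.0, cloud_ms=2.0, out_bytes=4_000, mem_bytes=50_000_000),
]
k, latency = best_split(layers, input_bytes=600_000, bandwidth_bps=20e6,
                        edge_mem_bytes=8_000_000)
# Selects k = 3 (split after "pool"), about 44 ms end to end.
print(f"split after layer {k}: {latency:.1f} ms")
```

In this toy profile, the output of `pool` is far smaller than the raw input. The sketch therefore keeps the first three layers on the device and ships only 50 kB. The memory budget rules out running the large `fc` layer locally. A real partitioner working on containerized applications would also have to handle non-sequential graphs, where a single cut may need to transmit several tensors.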